AI Trade Anniversary: Nvidia Is King - Challengers Are Coming

The AI trade has been monstrous for key players, notably Nvidia. But a shift has just begun, and perhaps the king of the hill could get toppled.

On November 30, 2022, OpenAI announced that it was launching a new Large Language Model (LLM), described as “a model called ChatGPT which interacts in a conversational way”. This ushered in the current era of Artificial Intelligence, a.k.a. AI.

Three years later, a lot has changed, and likely the world will never be the same.

There has been an arms race by different enterprises to build out their own versions of an LLM as well as the necessary data centers to house these services.

Building these data centers requires one key component: GPUs. From that, there is one clear winner to date in the AI arms race: Nvidia.

Nvidia designs the most-used GPUs for AI; the manufacturing is outsourced to TSMC or Samsung Electronics. Because of the high demand for these chips, revenue, price, and profits for Nvidia have increased massively in the past three years.

Nvidia’s stock has increased some 1,400% in the past three years. A $10,000 investment in NVDA stock on Day 1 would now be worth ~$125K, an approximate 12x in three years.

Nvidia, for its part, continues to maintain that demand for its products is strong and growing, with revenue growth hitting 60% in the latest quarter, released just two weeks ago.
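The relationship between a percentage gain and an ending multiple is easy to mix up, so a minimal sketch may help. The function names here are hypothetical and the inputs are simply the rounded figures quoted above. Note that a 1,400% gain works out to 15x, while ~$125K on $10,000 is 12.5x (a 1,150% gain), so the quoted figures are best read as rough approximations rather than an exact set.

```python
def multiple_from_pct_gain(pct_gain: float) -> float:
    """Ending value as a multiple of the starting value, e.g. +100% -> 2x."""
    return 1.0 + pct_gain / 100.0


def pct_gain_from_multiple(multiple: float) -> float:
    """Percentage gain implied by an ending multiple, e.g. 2x -> +100%."""
    return (multiple - 1.0) * 100.0


def ending_value(initial: float, pct_gain: float) -> float:
    """Value of an initial investment after a given percentage gain."""
    return initial * multiple_from_pct_gain(pct_gain)


if __name__ == "__main__":
    # Figures quoted in the article (rounded, so they need not agree exactly).
    print(multiple_from_pct_gain(1400))          # multiple implied by a 1,400% gain
    print(ending_value(10_000, 1400))            # $10,000 after a 1,400% gain
    print(pct_gain_from_multiple(125_000 / 10_000))  # gain implied by ~$125K
```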

But cracks are starting to form, and investors are getting anxious that there may be a bubble. These cracks may not be in the bigger picture of the AI trade itself so much as in one individual company versus another.

Just last week, Meta Platforms announced that it intends to acquire TPUs from Alphabet, the parent company of Google, to use in its data centers. This is a seismic shift, and while the buildup of AI data centers is still on, Nvidia’s GPU hegemony may have just been tarnished.

The important thing about Meta’s shift to Google’s TPUs is that it shows there are alternatives to Nvidia’s chips that are slightly less costly up front and, because of higher efficiency, less costly to companies over the longer term.

I still maintain that the AI trade has a long way to go and that the buildup will still occur. For now, companies will continue to build their data centers, and then provide their respective services. But the fact that there is an alternative to Nvidia’s chips means that there may be less demand for Nvidia products. That could be the first domino to tip.

NVDA stock is priced for continued gains in revenue and profits. What happens if the revenue growth rate wavers? What happens if overall demand starts to level out, or worse, go down?

Other companies would obviously be in the race to grab market share. AMD has its own GPUs and may be putting together more efficient processors. Any unexpected announcement from AMD in this arena could be a shattering shift for NVDA stock.

Then there is the rented data center business: Is it possible that by building massive data centers, some companies that are using Nvidia GPUs could build the services to compete with Nvidia?

While my focus is more on macroeconomic activity and how that translates into moves in the broader stock market, the AI trade has outpaced the general economy. The government shutdown had completely stopped the flow of economic data, but that flow is now restarting. Notwithstanding that focus, the AI buildup is easily a massive shift in the economy, and its effects deserve attention.

AI Is Not The Economy

It is important to note a few things here:

- AI is not the economy
- There are not many spillovers from the AI buildup that have driven the broader economy

AI is not the economy. The US economy is over 70% driven by consumers. Those consumers are feeling strains from tariffs, and confidence is near all-time lows.

Consumers themselves account for the lion’s share of growth in a normalized economy. If the rate of growth in personal incomes increases or decreases, you can expect an in-kind shift in expenditures. This, then, drives business expansion or contraction. You can see this in both personal incomes & personal expenditures, as well as in the M2 money supply.

Within a fractional-reserve banking system, if businesses are taking on loans at a growing or shrinking rate, this expands or contracts the money supply.

Growth in the M2 money supply has topped out somewhat.

But you also have to take into consideration that business expansion will generate its own economic activity. However, this is more of a non-linear reaction, and this is shown with the massive buildup of AI infrastructure, and the lack of in-kind economic expansion.

What we are seeing is that the tariffs are draining the consumer’s capabilities while, at the same time, the massive buildup of AI data centers has not spilled over to overall economic activity.

All of that may shift quickly as the US Supreme Court is likely to strike down the tariffs as an unconstitutional move by the executive branch.

Imagine what kind of economic activity will occur once the consumer is unburdened by the tariffs.

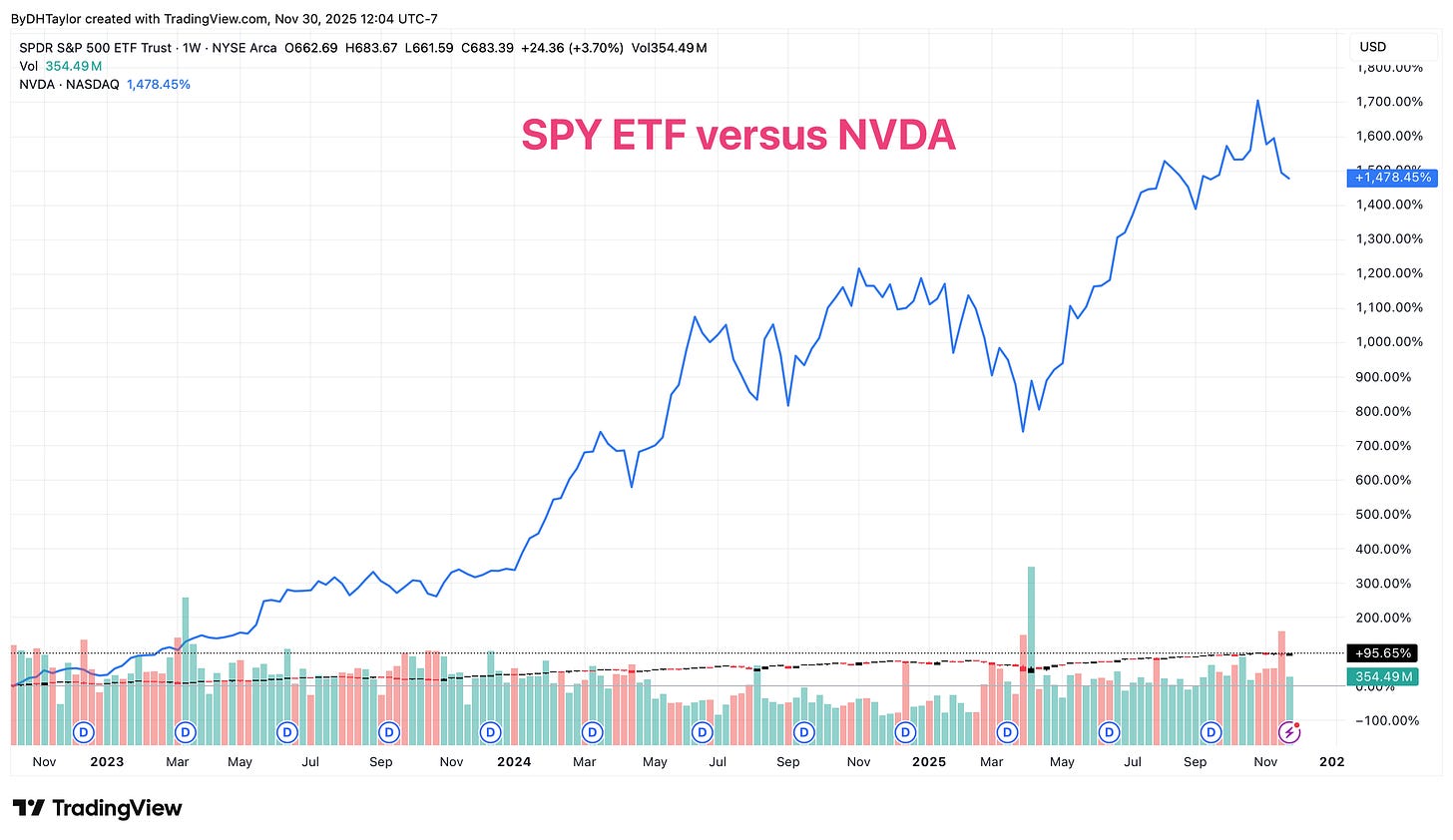

SPY Versus NVDA

Above is a chart of the SPY ETF, which tracks the broader S&P 500, versus NVDA stock since November 30, 2022. While the S&P has had remarkable gains during this time, these gains are dwarfed by NVDA stock’s 1,400% gain.

Nvidia states that demand still exists for its GPU chips. The company is backlogged with orders. But investors are getting nervous.

Why the Inventory Buildup?

In its latest financial statements, Nvidia has seen a buildup in its inventory. This raises the question: If the company is backlogged, why is there a buildup in inventory?

Why the Receivables Lag?

Another key factor that I saw in the financials was a lag in accounts receivable, which in itself is not necessarily noteworthy. This could be transitory and nothing more. Still, it raises the question of which companies are lagging. Certainly, the Metas & Microsofts of the acquisition world would not need to float a CapEx purchase. And considering the massive size of these companies and the overwhelming size of their purchases, which companies are dragging down this metric?

For me, I am sticking with Nvidia’s own guidance that it sees continued, long-term demand for its chips… for now.

The Problem With Nvidia Being So Big

The math is tricky. NVDA stock represents about 7%-8% of the S&P 500 on a weighted basis, a figure that changes by the minute as the price of NVDA fluctuates.

While there is some consternation about a potential AI bubble, NVDA’s valuation remains within reach of the overall stock market. The forward P/E ratio for the broader stock market is 22.5x, whereas the forward P/E for NVDA is 28.5x.

My take on this overall is that if Nvidia starts to lose business, it is not because the AI buildup is slowing so much as that the companies demanding its chips are shifting to alternatives. AMD may very well grab a chunk of this shift, and Alphabet is already taking some of it.

Regardless of which company finds itself the new AI chip maker du jour, the demand will persist… for now. I want to see profits! Nvidia is certainly there, but the services revenue stream needs to start growing in order to continually push demand.
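To make the weighting math concrete, here is a minimal sketch of how a move in one heavily weighted stock feeds through to an index. It assumes a simple weighted-sum model in which every other constituent moves by a single blended return; the function names and the 7.5% / -20% inputs are illustrative assumptions, not figures from any index provider.

```python
def index_return(weight: float, stock_return: float, rest_return: float = 0.0) -> float:
    """Index return when one stock (at `weight`) moves by `stock_return`
    and the remainder of the index moves by `rest_return`."""
    return weight * stock_return + (1.0 - weight) * rest_return


def drifted_weight(weight: float, stock_return: float, rest_return: float = 0.0) -> float:
    """The stock's new index weight after the move, before any rebalancing."""
    total = index_return(weight, stock_return, rest_return)
    return weight * (1.0 + stock_return) / (1.0 + total)


if __name__ == "__main__":
    w = 0.075      # assumed midpoint of the 7%-8% weight cited above
    move = -0.20   # hypothetical 20% drop in the stock, all else flat
    print(f"index move: {index_return(w, move):.2%}")
    print(f"new weight: {drifted_weight(w, move):.2%}")
```

Under these assumptions, a 20% drop in a stock with a 7.5% weight pulls the index down about 1.5% on its own, and the stock’s weight drifts lower as a result, which is why the weight changes continuously with price.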

Google: Move Over Nvidia

Thanksgiving week saw the announcement from Meta Platforms that it was purchasing TPUs from Google parent Alphabet for its AI data center. While Meta has already amassed a large quantity of GPUs for its data center, the shift to purchasing some TPUs can be a bit of an earthquake.

As I mentioned previously, TPUs are more efficient and have lower up-front costs. But they are less well-rounded. From all of the articles that I have read, a good balance between the two is likely to be the objective for all involved.

For its part, OpenAI is urgently worried about its product offerings. Google’s Gemini 3 performs better. But there is more to this story: With its own data centers, Google now controls the entire ‘stack’ of hardware & software. And its products are better than those of the first to arrive: OpenAI.

The services end of all of this is where I have said the real money is. Companies are hastily building up their respective data centers and working to improve the products. Nvidia is needed up front to build these data centers. I do not see NVDA stock as a bubble, as so many have feared. Instead, I see it as a company that is offering the necessary foundation to get these data centers up and running, but the eventuality is that the companies will have what they need, and the growth rate will dissipate.

With Google getting a bigger slice of the data center buildup, this will push GOOG stock further as its own revenue increases from other companies purchasing its TPUs. With Gemini being a better product than OpenAI’s, Google stands to gain a lot more over the longer term.

Then there is the fact that Alphabet offers far more than just search. Google has a massive foundation of offerings that search will augment. Search, to me, is a feature, not a business. Google understood this decades ago and built an entire empire starting with search. I expect OpenAI to be scrambling for its life over the longer term. Google will catch and retain all that overflow business.

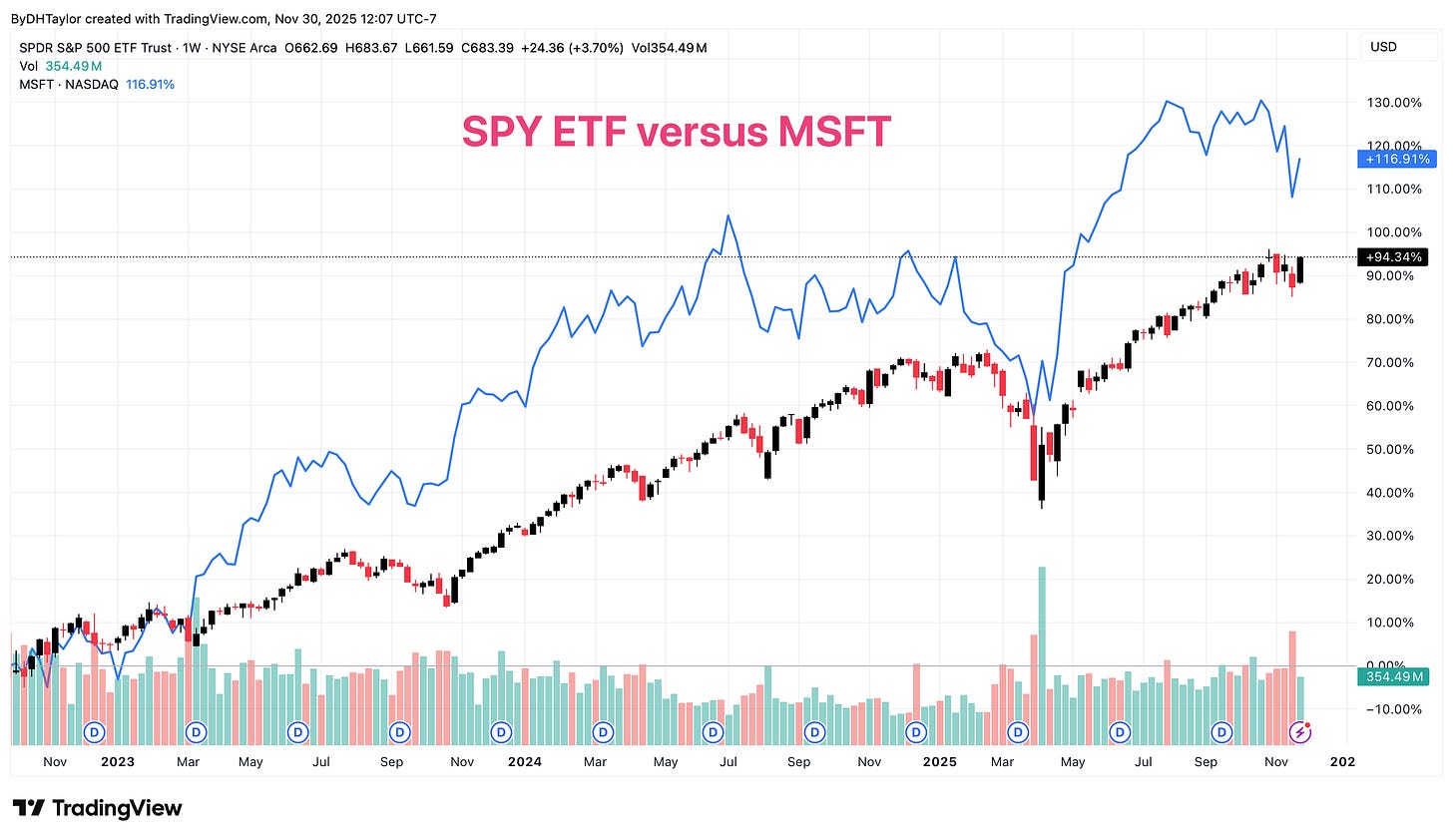

Microsoft: The Biggest Nvidia Customer

Who is the biggest customer for Nvidia products, and therefore the company at the forefront of offering AI products? Microsoft. The behemoth is slated to be building up some $250B for data centers and cloud potential. As the ‘customer’ of the AI buildup, its actual profits have yet to really materialize. This is where I ponder what happens next with AI.

First, research is showing us that users are not using AI for productivity gains, but for pondering esoteric questions in life. There has only been about an 8% conversion rate for users to pay for premium search. That makes me question the buildup and its potential payoff.

The total sums being spent on AI data centers are astronomical. There is the up-front build, and then the ongoing cost of the massive amount of electricity needed for all of the searches goes on top of that. But if users are not paying premium prices for their searches, what pays for the costs?

Microsoft, just like Alphabet & Meta Platforms, has additional offerings that can buffer the overall costs of any one individual search. Microsoft is enterprise, and has a better ability to provide enterprise-focused product offerings that would draw in revenue.

Already, Azure is seeing revenue gains; however, there was a downgrade just this week. There has not been enough conversion of users to premium pricing usage; everything is essentially being given away for free.

Meta

Meta Platforms stock has surged with the AI boom, as shown above. Meta’s growth is far greater than that of Microsoft and Google, and I am hesitant to say why that is. On the one hand, it is the ecosystem that could drive continued profits for Meta over the hardware buildup pace. But is Meta Platforms better positioned than Microsoft & Google?

Meta is far more consumer-based than Microsoft. While Meta has seen solid gains for its AI-related ad revenue, the growth rate of those gains is starting to shrink.

Then you have to account for the overall AI arms race investments, and the profits are not justifying the costs. There are well-founded concerns that Meta’s buildout is too much, and that the costs of taking on substantial debt will overwhelm profits.

Energy Consumption is Driving Costs

The next big factor of the AI buildup is the energy consumption these companies will need. I am fairly balanced when it comes to breaking down all 11 sectors of the S&P 500. The energy sector is of big interest to me because of the shifts we will be seeing. The AI data centers are going to be massive consumers of energy.

Expectations are that over the next 5 years, AI data centers’ energy demand will double. This will represent a total of 12% of all US energy demand. But that demand shift will necessitate increased investment in energy. That 12% is in addition to what already exists.
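As a rough sense of pace, a doubling over five years can be translated into an implied annual growth rate. The sketch below assumes steady compounding, which real demand will not follow exactly, and the function name is hypothetical.

```python
def implied_annual_growth(total_multiple: float, years: float) -> float:
    """Constant annual growth rate that compounds to `total_multiple` over `years`."""
    return total_multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Doubling (2x) over 5 years, per the expectation cited above.
    rate = implied_annual_growth(2.0, 5)
    print(f"implied annual growth: {rate:.1%}")
```

Under that assumption, doubling in five years implies growth of roughly 15% per year, compounded.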

Energy expansion capabilities in the United States are projected to grow some 75GW-125GW in the following 5 years, with an additional 125GW slated by 2035 on top of these initial projects.

After these two layers are added to the overall grid, AI data centers are expected to be demanding 30%-40% of all energy in the United States.

You can be assured that I will be following these projects heavily over the following decade as these investments represent opportunities for financial gains.

My Take

Nvidia itself may be in some form of trouble, as its stock is priced for continued increases in revenue and profits. Nvidia designs the necessary hardware and sells its chips, with TSMC and Samsung producing them. But now other options are available and end-users are starting to expand their

Then we begin to play the what-if game: What if AMD were to announce something this week that would be less costly for data center builders both up front and long term? NVDA could crack hard. But another may take its place. And while there is likely to be a shift going back and forth, based on overall demand, NVDA valuation remains within a reasonable reach of normalized valuations.

The overall buildup is what I am focused on at this stage, and that appears to be on its continued path. Energy consumption is something that I will be focusing on as well, and that could be a potential boon for investors.